Geospatial Object Detection with YOLO and U-Net in Satellite Imagery

- GeoWGS84

- Jun 9, 2025

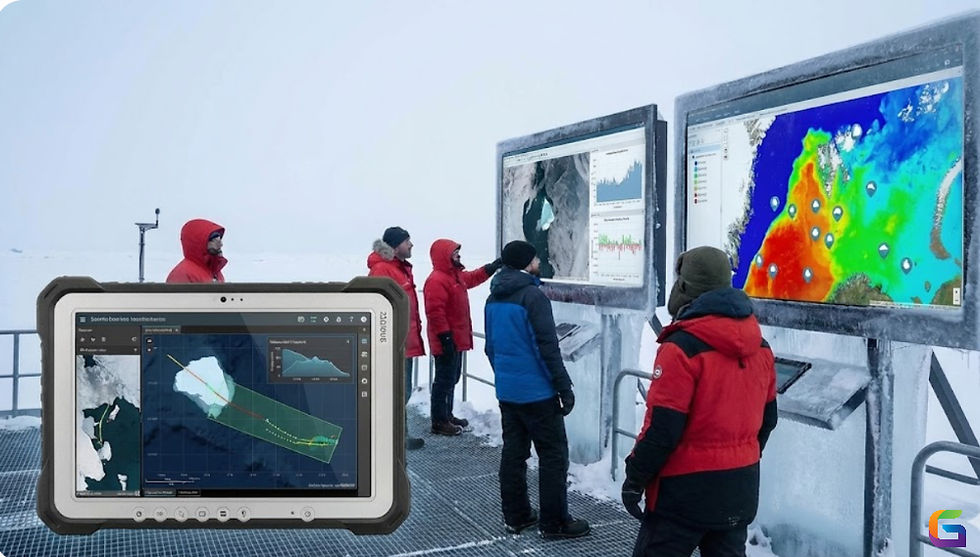

Combining deep learning with geospatial analytics has dramatically changed how satellite data is interpreted. With the proliferation of high-resolution Earth Observation (EO) data from satellites and unmanned aerial vehicles (UAVs, or drones), the capacity to automatically detect, segment, and classify objects has become essential for applications in urban planning, agriculture, defence, climate monitoring, and disaster response. YOLO and U-Net are two of the most popular architectures in geospatial deep learning.

1. Understanding the Geospatial Context in Deep Learning

Satellite imagery is georeferenced by nature: each pixel is linked to a particular location on Earth. This makes object detection tasks more demanding, because models must not only identify objects (such as cars, buildings, and roads) but also preserve spatial accuracy for GIS integration. Pre-processing techniques like raster re-projection, image tiling, and coordinate transformation are therefore essential.

2. Why YOLO for Geospatial Object Detection?

YOLO (You Only Look Once) models, such as YOLOv5 and more recent iterations like YOLOv8, are among the most widely used object detectors and are known for their balance of accuracy and speed. Because YOLO predicts all bounding boxes in a single forward pass, it can process large volumes of satellite imagery with low latency.

Key Benefits of YOLO for Satellite Imagery:

Real-time inference for monitoring and surveillance over large areas.

Bounding-box output is well suited to detecting small, discrete objects such as vehicles, ships, or containers.

Suitable for drone or high-resolution aerial data (10–30 cm/pixel).

Workflow Integration:

Divide raster data into smaller, more manageable tiles (for example, 512x512 or 1024x1024 pixels).

Run YOLO inference on each tile.

Use affine transforms or GeoTIFF metadata to map bounding box coordinates back to actual geographic coordinates.

Export results as feature classes, shapefiles, or GeoJSON for use with GIS programs like ArcGIS or QGIS.
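The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names (`tile_windows`, `pixel_to_geo`, `box_to_feature`), the 64-pixel overlap, and the example geotransform are hypothetical, the geotransform follows GDAL's six-element convention, and the detector itself is left out.

```python
import json


def _offsets(size, tile, stride):
    """Start offsets along one axis so that the tiles cover [0, size)."""
    offs = [0]
    while offs[-1] + tile < size:
        offs.append(offs[-1] + stride)
    return offs


def tile_windows(width, height, tile=512, overlap=64):
    """Yield (col_off, row_off, w, h) windows covering a width x height raster."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be smaller than the tile size")
    stride = tile - overlap
    for row_off in _offsets(height, tile, stride):
        for col_off in _offsets(width, tile, stride):
            # Edge tiles are clipped to the raster extent.
            yield (col_off, row_off,
                   min(tile, width - col_off), min(tile, height - row_off))


def pixel_to_geo(gt, col, row):
    """Apply a GDAL-style geotransform (x0, dx, rot, y0, rot, dy) to a pixel."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def box_to_feature(box, window, gt, label, score):
    """Convert a tile-local pixel box (xmin, ymin, xmax, ymax) to a GeoJSON feature."""
    col_off, row_off = window[0], window[1]
    xmin, ymin, xmax, ymax = box
    # Counter-clockwise ring for a north-up raster (negative pixel height).
    corners = [(xmin, ymin), (xmin, ymax), (xmax, ymax), (xmax, ymin), (xmin, ymin)]
    ring = [list(pixel_to_geo(gt, col_off + c, row_off + r)) for c, r in corners]
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": {"label": label, "score": score},
    }


# Assumed example: 0.5 m pixels, north-up, origin at (500000, 4200000).
example_gt = (500000.0, 0.5, 0.0, 4200000.0, 0.0, -0.5)
example_feature = box_to_feature((10, 20, 30, 40), (448, 0, 512, 512),
                                 example_gt, "vehicle", 0.9)
example_geojson = json.dumps({"type": "FeatureCollection",
                              "features": [example_feature]})
```

The coordinates come out in the raster's own coordinate reference system. Strict GeoJSON expects WGS84 longitude and latitude, so a projected raster would need a reprojection step before export.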

3. Why U-Net for Semantic Segmentation in EO Data?

Whereas YOLO is best at detecting discrete objects, U-Net excels at pixel-level classification, which is crucial for extracting continuous features like building footprints, water bodies, or land cover types. Its encoder-decoder architecture with skip connections allows precise localisation, which makes U-Net well suited to geospatial segmentation.

U-Net Advantages:

Accurate boundary delineation of irregular geospatial features.

Works well with multi-channel satellite data (RGB, NIR, and derived indices such as NDVI).

Supports training with raster masks and outputs binary or multi-class segmentation maps.

Workflow Overview:

Use tools like GDAL or Rasterio to rasterize vector polygons into labelled raster masks.

Train the U-Net model on satellite image patches paired with matching masks.

Reconstruct full-resolution segmentation maps by mosaicking and merging the patch-level predictions.

Use post-processing techniques (such as polygonization and smoothing) to extract features and convert them into GIS-compatible formats.
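The mosaicking step is where overlapping patches have to be reconciled. One common option, shown here as a rough sketch rather than the only approach, is to average the predicted probabilities wherever patches overlap. The function name `stitch_patches` and the `(row_off, col_off, array)` patch layout are assumptions made for this example.

```python
import numpy as np


def stitch_patches(patches, shape):
    """Average overlapping patch predictions into one full-size map.

    patches: iterable of (row_off, col_off, probs), where probs is a 2-D
             array of per-pixel probabilities for one patch.
    shape:   (height, width) of the full raster.
    """
    total = np.zeros(shape, dtype=np.float64)
    count = np.zeros(shape, dtype=np.float64)
    for row_off, col_off, probs in patches:
        h, w = probs.shape
        total[row_off:row_off + h, col_off:col_off + w] += probs
        count[row_off:row_off + h, col_off:col_off + w] += 1
    # Pixels never covered by any patch stay at 0 instead of dividing by zero.
    return np.divide(total, count, out=np.zeros_like(total), where=count > 0)


# Two 2x3 patches that overlap by two columns on a 2x4 raster.
merged = stitch_patches(
    [(0, 0, np.ones((2, 3))), (0, 1, np.zeros((2, 3)))],
    shape=(2, 4),
)
binary_mask = merged > 0.5  # threshold chosen only for illustration
```

Averaging tends to soften seams at patch borders. The thresholded mask can then be passed to the polygonization step.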

4. Combined YOLO + U-Net Pipeline

In many real-world applications, YOLO and U-Net work better together:

YOLO identifies objects rapidly for initial localisation.

U-Net then refines those detections with pixel-wise segmentation.

Example: Urban Structure Mapping

Use YOLO to detect buildings in satellite tiles.

Use U-Net to extract building footprints within the detected bounding boxes.

Merge the results, assign real-world coordinates, and integrate them into geospatial databases.
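The detect-then-segment hand-off might look roughly like the sketch below. It assumes the detector has already returned integer pixel boxes for a tile and treats the segmentation model as a hypothetical callable, `segment_fn`, that maps an image crop to a boolean mask of the same height and width. The padding value is also an assumption; a real pipeline would typically resize crops to the network's input size.

```python
import numpy as np


def refine_detections(tile, boxes, segment_fn, pad=8):
    """Run a segmentation function inside each detected box of one tile.

    tile:       (H, W, C) image array.
    boxes:      list of integer (xmin, ymin, xmax, ymax) pixel boxes.
    segment_fn: hypothetical callable, crop -> boolean mask of shape (h, w).
    pad:        extra context in pixels added around each box.
    """
    height, width = tile.shape[:2]
    mask = np.zeros((height, width), dtype=bool)
    for xmin, ymin, xmax, ymax in boxes:
        # Pad the box for context, then clip it to the tile bounds.
        x0, y0 = max(0, xmin - pad), max(0, ymin - pad)
        x1, y1 = min(width, xmax + pad), min(height, ymax + pad)
        if x1 <= x0 or y1 <= y0:
            continue
        crop = tile[y0:y1, x0:x1]
        # Paste the crop-level mask back at its position in the tile.
        mask[y0:y1, x0:x1] |= segment_fn(crop)
    return mask


# Stand-in "model": a brightness threshold instead of a trained U-Net.
demo_tile = np.zeros((20, 20, 3), dtype=np.uint8)
demo_tile[5:10, 5:10] = 255
demo_mask = refine_detections(
    demo_tile, [(4, 4, 11, 11)], lambda crop: crop[..., 0] > 128, pad=2
)
```

Because the mask is written back in tile pixel coordinates, it can be georeferenced with the same transform as the tile it came from.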

5. Technical Considerations

Data Pre-processing:

Use coordinate-aware tiling for geospatial integrity.

Normalize input data (e.g., histogram equalization, band scaling).

Align multi-spectral bands and generate training chips with spatial metadata.
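As one example of band scaling, the sketch below applies a per-band percentile stretch, a common alternative to histogram equalization. The 2nd and 98th percentile cut-offs and the function name `scale_bands` are assumptions for illustration, and nodata pixels are not handled.

```python
import numpy as np


def scale_bands(image, low=2.0, high=98.0):
    """Stretch each band of a (bands, H, W) array to [0, 1] using percentiles."""
    image = image.astype(np.float32)
    out = np.empty_like(image)
    for b in range(image.shape[0]):
        lo, hi = np.percentile(image[b], [low, high])
        if hi <= lo:
            # Constant band: nothing to stretch.
            out[b] = 0.0
        else:
            out[b] = np.clip((image[b] - lo) / (hi - lo), 0.0, 1.0)
    return out


# Two-band example: a ramp of values and a constant band.
raw = np.stack([
    np.arange(100).reshape(10, 10),
    np.full((10, 10), 7),
]).astype(np.uint16)
scaled = scale_bands(raw)
```

Scaling each band independently keeps a bright band from dominating the others, which matters when visible and near-infrared bands have very different value ranges.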

Training Tips:

For YOLO: With anchor-based versions such as YOLOv5, cluster anchor boxes on the object dimensions found in satellite images. YOLOv8 is anchor-free, so this step does not apply to it.

For U-Net: Employ data augmentation (rotation, flip, brightness) to simulate various terrain and lighting conditions.

Use overlap-based loss functions, such as an Intersection over Union (IoU, or Jaccard) loss or a Dice loss, for improved segmentation accuracy.
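To make the overlap-based losses concrete, here is a minimal NumPy sketch of the "soft" Dice and IoU losses for a binary mask. The function names and the smoothing constant `eps` are assumptions for this example. In actual training the same arithmetic would be written with a deep learning framework's tensors so that gradients can flow through it.

```python
import numpy as np


def soft_dice_loss(probs, target, eps=1e-6):
    """1 - Dice coefficient between predicted probabilities and a 0/1 mask."""
    probs = np.asarray(probs, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    intersection = (probs * target).sum()
    dice = (2.0 * intersection + eps) / (probs.sum() + target.sum() + eps)
    return 1.0 - dice


def soft_iou_loss(probs, target, eps=1e-6):
    """1 - IoU (Jaccard index) between predicted probabilities and a 0/1 mask."""
    probs = np.asarray(probs, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    intersection = (probs * target).sum()
    union = probs.sum() + target.sum() - intersection
    return 1.0 - (intersection + eps) / (union + eps)


truth = [[1, 1], [0, 0]]
half_right = [[1, 0], [0, 0]]  # finds one of the two positive pixels
dice_half = soft_dice_loss(half_right, truth)
iou_half = soft_iou_loss(half_right, truth)
```

Both losses are driven by the overlap between prediction and mask rather than by per-pixel accuracy, which helps when the target class covers only a small fraction of each tile.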

Post-Processing:

Apply Non-Maximum Suppression (NMS) for YOLO outputs.

Vectorize U-Net segmentation using OpenCV or rasterio.features.shapes.

Reproject outputs with pyproj and write them to vector formats with Fiona.
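The NMS step listed above can be sketched as a greedy pure-Python routine. This is an illustrative version, not the implementation used by any particular YOLO release; the function names and the 0.5 IoU threshold are assumptions. With overlapping tiles, it would normally run after boxes have been shifted into a shared full-raster pixel frame, so that duplicates from neighbouring tiles are compared against each other.

```python
def box_iou(a, b):
    """Intersection over Union of two (xmin, ymin, xmax, ymax) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a better box too strongly.
        if all(box_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


demo_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
demo_scores = [0.9, 0.8, 0.7]
kept = nms(demo_boxes, demo_scores)
```

In this example the second box overlaps the first with an IoU of about 0.68, so it is suppressed, while the distant third box is kept.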

Combining YOLO and U-Net in a geospatial deep learning pipeline provides the best of both worlds: real-time object detection and pixel-precise segmentation. With proper data pre-processing, coordinate-aware workflows, and scalable infrastructure, this approach unlocks powerful capabilities for automated feature extraction from satellite imagery.

For more information or any questions regarding Object Detection with YOLO and U-Net, please don't hesitate to contact us at

Email: info@geowgs84.com

USA (HQ): (720) 702–4849

(A GeoWGS84 Corp Company)
